The scientific method of measuring marketing effectiveness

As a seasoned marketer, you’ll no doubt be familiar with this quote, often attributed to John Wanamaker:

“Half the money I spend on advertising is wasted; the trouble is I don’t know which half.”

Measuring marketing effectiveness accurately is the Holy Grail for CMOs and marketing leaders. With so many established, new and emerging channels at our disposal, it’s more important than ever to identify and prioritize the ones that drive the most value for our businesses.

Many marketers believe that evaluating the impact of marketing is straightforward: simply compare the outcomes of one campaign with another and see which channels generated value.

However, attribution modelling, whether simple or more complex, doesn’t always give the right credit to different types of advertising and marketing. Last-touch attribution ignores the other influences on the path to conversion, whilst multi-touch attribution can give too much credit to low-value touches at the start and end of the measurement period.

The advantages of incremental measurement

Some of my fondest memories from school involved the experiments we conducted in science class. Whether we were testing magnetic fields, chemicals or plants, our experiments always had five steps:

- Question(s)

- Observation

- Hypothesis

- Method

- Results

Following the scientific method ensures that experiments can be easily repeated and their results accepted. In the method step, the subjects of the test should be split into two groups:

- Treatment - Receives the treatment or intervention, usually a manipulation of the independent variable.

- Control - Receives no treatment or intervention, or receives standard treatment that can be understood as a baseline.

Experiments involving treatment and control groups represent the scientific gold standard for finding out what works. By comparing results between treatment and control groups, we can evaluate the value that specific marketing and advertising activities deliver far more accurately. This approach gives us the incremental value: what a campaign adds to revenue over and above what would have happened without it.

However, poorly designed experiments can lead to misallocated budgets, especially if too much weight is placed on a single set of results. Before making a final decision on what is or isn’t effective, you need a very clear question and hypothesis, as well as a series of repeatable experiments.

The components of a controlled experiment

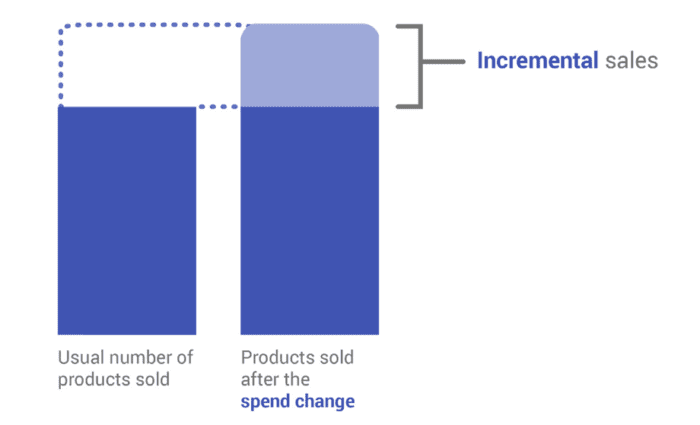

The main difference between results generated by scientific experiments and other forms of measurement is the ability to identify incremental impact. Incremental uplift demonstrates the performance of the channel being tested in a more meaningful way. Rather than showing how many products your target audience bought, it shows how many more products they bought because of the marketing activity they were exposed to as a result of a change in media spend.

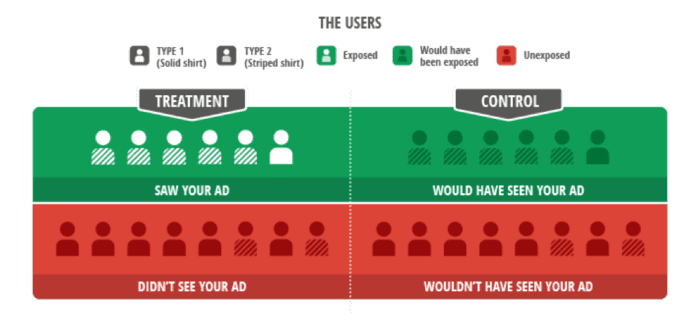
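As a minimal sketch of the arithmetic behind incremental uplift, the Python below uses the control group’s conversion rate as the baseline for what the treatment group would have done anyway. The `GroupResult` structure, the `incremental_uplift` function and all the numbers are hypothetical, chosen only for illustration, and the sketch assumes both groups were randomly drawn from the same target audience.

```python
from dataclasses import dataclass


@dataclass
class GroupResult:
    """Outcome counts for one experiment group (hypothetical structure)."""
    users: int        # people randomly assigned to the group
    conversions: int  # purchases recorded during the measurement period

    @property
    def rate(self) -> float:
        return self.conversions / self.users


def incremental_uplift(treatment: GroupResult, control: GroupResult) -> dict:
    """Estimate the conversions caused by the marketing activity.

    The control group's rate stands in for what the treatment group would
    have done without the activity; anything above that is incremental.
    """
    baseline = control.rate * treatment.users       # expected conversions anyway
    incremental = treatment.conversions - baseline  # extra conversions from the activity
    relative_lift = (treatment.rate - control.rate) / control.rate
    return {
        "baseline_conversions": baseline,
        "incremental_conversions": incremental,
        "relative_lift": relative_lift,
    }


# Illustrative numbers only, not real campaign data.
result = incremental_uplift(
    treatment=GroupResult(users=50_000, conversions=1_200),
    control=GroupResult(users=50_000, conversions=1_000),
)
print(result)
```

With these made-up numbers, the 2% control rate implies that about 1,000 of the treatment group’s 1,200 purchases would have happened anyway, so roughly 200 are incremental, a 20% relative lift. Reporting 200 rather than 1,200 is the difference between incremental measurement and counting total sales.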

A treatment and control experiment has two main features:

- A clearly defined target group

- Control over who will be exposed to the marketing activity

A marketing campaign will never reach all users within the target audience because exposure to an ad, search listing or email will depend on people’s individual behaviour, numerous targeting parameters and competitor bidding.

This means that the people who are reached will differ from those who are not, so simply comparing the two can mislead. To measure effectiveness, we need a fairer comparison: "Did people who saw our ad change their behaviour, relative to not seeing it?"

To do this, we must randomly divide our target audience into two groups: those who are exposed (treatment) and those who are not (control). Random assignment means that, on average, the two groups differ only in their exposure.

This test and control framework establishes a baseline so we can clearly identify the impact of our marketing efforts. Without this framework, it’s easy for results to be ambiguous or inconclusive.
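One common way to make the random split in practice, offered here as a sketch rather than a prescribed method, is to hash each user’s ID together with an experiment name and use the hash to pick a group. Because the hash is deterministic, a person stays in the same group for the whole measurement period, which matters when, for example, the control group must be suppressed from every email send. The `assign_group` function, the experiment name `email-targeting-test` and the user IDs are hypothetical.

```python
import hashlib


def assign_group(user_id: str, experiment: str, treatment_share: float = 0.5) -> str:
    """Assign a user to 'treatment' or 'control' for one experiment.

    The SHA-256 hash of "experiment:user_id" behaves like a random number,
    so the split is effectively random, yet the same user always gets the
    same group for a given experiment.
    """
    digest = hashlib.sha256(f"{experiment}:{user_id}".encode("utf-8")).hexdigest()
    # Turn the first 8 hex digits (32 bits) into a number in [0, 1).
    bucket = int(digest[:8], 16) / 2**32
    return "treatment" if bucket < treatment_share else "control"


# Hypothetical audience of 10,000 users.
audience = [f"user-{i}" for i in range(10_000)]
groups = {user: assign_group(user, "email-targeting-test") for user in audience}
treatment = [u for u, g in groups.items() if g == "treatment"]
control = [u for u, g in groups.items() if g == "control"]
print(len(treatment), len(control))
```

The printed counts should come out close to a 50/50 split. Lowering `treatment_share` gives a larger control group; raising it (for example to 0.9) withholds marketing from fewer people, at the cost of a less precise comparison.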

Bear in mind, however, that many factors can affect the results of an experiment. Don’t assume that email or social media doesn’t work just because one experiment performed below expectations. Even if the treatment and control groups and targeting were set up correctly, the experiment may have failed because the creative messaging or proposition wasn’t effective. One test is never enough, so run a series of tests with different variables.

Five steps to creating an effective experiment

If this is the first time you’ve measured marketing effectiveness this way, your first controlled experiment will itself be an experiment. So take your time and give yourself permission to test and learn. Here are five steps to follow:

1. Set out your business goals and performance metrics

Ensure that tests are aligned with the broader business goals you’re looking to influence. If your company wants to build a base of committed customers, for example, the focus might be on testing direct, one-to-one communication, such as the targeting and creative of your mail and email communications.

2. Ask a clear question

Once you’ve thought through your business goals, ask a clear question about what you’re looking to achieve. Start with an objective:

“To understand whether narrowing our email targeting to the 25-40 demographic drives incremental newsletter sign-ups.”

And a hypothesis:

“Changing our targeting parameters to reach a narrower audience (from 20-50 to 25-40) will improve relevance and increase the click-through rate (CTR) for newsletter sign-ups.”
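Once the test has run, a hypothesis like this one can be checked with a standard one-sided two-proportion z-test on the click-through rates of the two targeting options. The sketch below uses only Python’s standard library (`statistics.NormalDist`); the function name and the click and send counts are invented for illustration, and the normal approximation assumes each option has a reasonably large number of clicks and non-clicks.

```python
from math import sqrt
from statistics import NormalDist


def one_sided_z_test(clicks_a: int, sends_a: int,
                     clicks_b: int, sends_b: int) -> tuple[float, float]:
    """Test whether option A's click-through rate is higher than option B's.

    Returns (z statistic, one-sided p-value) using the pooled-proportion
    normal approximation.
    """
    ctr_a = clicks_a / sends_a
    ctr_b = clicks_b / sends_b
    pooled = (clicks_a + clicks_b) / (sends_a + sends_b)
    se = sqrt(pooled * (1 - pooled) * (1 / sends_a + 1 / sends_b))
    z = (ctr_a - ctr_b) / se
    # Chance of seeing a z at least this large if the CTRs were really equal.
    p_value = 1 - NormalDist().cdf(z)
    return z, p_value


# Invented counts: A = narrowed 25-40 targeting, B = original 20-50 targeting.
z, p = one_sided_z_test(clicks_a=460, sends_a=20_000, clicks_b=400, sends_b=20_000)
print(f"z = {z:.2f}, one-sided p = {p:.4f}")
```

A small p-value (conventionally below 0.05) supports the hypothesis. A large one means this test could not tell the two options apart, which is not the same as proving they perform equally.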

3. Develop a media plan

Develop a solid media plan in response to the objective and hypothesis of the test. Define the types of media you plan to use and test to achieve your objectives.

4. Design the experiment

Design your experiment with all the right details:

- Set parameters

- Outline the measurement period

- Include confidence intervals

- Define clear treatment and control groups

- Use random selection to assign people to the treatment and control groups
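Two of these details lend themselves to a quick calculation, shown here as a minimal Python sketch using the standard normal-approximation formulas for comparing two proportions. `sample_size_per_group` estimates how many people each group needs in order to detect a given lift, which, combined with your expected reach per week, suggests how long the measurement period should be. `diff_confidence_interval` gives a confidence interval for the difference in conversion rates once results are in. Both function names, the 5% significance level, the 80% power and the example rates are assumptions for illustration.

```python
from math import ceil, sqrt
from statistics import NormalDist


def sample_size_per_group(p_control: float, p_treatment: float,
                          alpha: float = 0.05, power: float = 0.8) -> int:
    """People needed in EACH group to detect a change from p_control to p_treatment.

    Normal-approximation formula for a two-sided test of two proportions.
    """
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # about 1.96 for alpha = 0.05
    z_beta = NormalDist().inv_cdf(power)           # about 0.84 for 80% power
    variance = p_control * (1 - p_control) + p_treatment * (1 - p_treatment)
    n = (z_alpha + z_beta) ** 2 * variance / (p_treatment - p_control) ** 2
    return ceil(n)


def diff_confidence_interval(conv_t: int, n_t: int, conv_c: int, n_c: int,
                             level: float = 0.95) -> tuple[float, float]:
    """Confidence interval for (treatment rate - control rate)."""
    p_t, p_c = conv_t / n_t, conv_c / n_c
    # Unpooled standard error, since we are not assuming the rates are equal.
    se = sqrt(p_t * (1 - p_t) / n_t + p_c * (1 - p_c) / n_c)
    z = NormalDist().inv_cdf(0.5 + level / 2)      # about 1.96 for a 95% interval
    diff = p_t - p_c
    return diff - z * se, diff + z * se


# Planning: 2% baseline conversion, hoping to detect a rise to 2.4%.
print(sample_size_per_group(0.02, 0.024))

# Reporting: illustrative results of 1,200 vs 1,000 conversions from 50,000 users each.
print(diff_confidence_interval(1_200, 50_000, 1_000, 50_000))
```

Under these assumptions, detecting a rise from 2% to 2.4% needs on the order of 21,000 people per group, and halving the expected lift nearly quadruples that number. For the illustrative results, the 95% interval runs from roughly 0.2 to 0.6 percentage points; because it excludes zero, the uplift is unlikely to be down to chance alone at that confidence level.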

5. Test and learn

It will take more than one attempt to evaluate the effectiveness of any given channel. Take what you’ve learned from your first experiment, make adjustments and test again.

Conclusion: the benefits of controlled marketing experiments

There are three main benefits to running controlled marketing experiments:

- Hypothesis-driven - Experimental design brings goal-setting to the front of the process.

- Gold standard of measurement - A valid, controlled experiment will tell you whether marketing on a specific platform creates incremental value. Other measurement methods (e.g. attribution) are useful but depend on assumptions and correlations.

- Simple to understand - Controlled experiments are transparent, and marketers at any level can interpret the results.